- Accessories for Acoustic End-of-Line Test Systems

- Actuators

- Combustion Engines

- Durability

- eDrive

- Mobile Systems

- Production Testing Sensors

- Transmission & Gearboxes

- Turbo Charger

- DAQ Systems

- High Precision and Calibration Systems

- Industrial electronics

- Power Analyser

- S&V Hand-held devices

- S&V Signal conditioner

- Head and torso simulators (HATS)

- Artificial ears

- Electroacoustic hardware

- Bone conduction

- Electroacoustic software

- Pinnae

- Accessories

- DAQ Software

- Drivers & API

- nCode - Durability and Fatigue Analysis

- ReliaSoft - Reliability Analysis and Management

- Test Data Management

- Utility

- Vibration Control

- Acoustic

- Current / voltage

- Displacement

- Force sensors

- Load Cells

- Multi Component Sensors

- Pressure

- Strain Gauges

- Strain Sensors

- Temperature Sensors

- Tilt Sensors

- Torque

- Vibration

- LDS Shaker Systems

- Power Amplifiers

- Vibration Controllers

- Measurement Exciters

- Modal Exciters

- Accessories for Vibration Testing Equipment

- Articles

- Case Studies

- Recorded Webinars

- Presentations

- Primers and Handbooks

- Videos

- Whitepapers

- Search all resources

- Acoustics

- Asset & Process Monitoring

- Custom Sensors

- Data Acquisition & Analysis

- Durability & Fatigue

- Electric Power Testing

- NVH

- Reliability

- Smart Sensors

- Vibration

- Virtual Testing

- Weighing

- Aerospace & Defence

- Audio

- Automotive & Ground Transportation

- Energy

- Robotics

- Vibration testing - Industries

- Powering the Future of Electrification

- Powering Productivity with Automation

- Accelerate Innovation with Digitisation

- Calibration

- HBK Assured Service Contracts

- Installation, Maintenance & Repair

- Materials Characterisation & Test Lab - AMCT

- Bridge Calibration Units

- Microphone Calibration System

- Sound Level Meter Calibration System

- Strain Gauge Precision Instrument

- Vibration Transducer Calibration System

- Accessories

- BK Connect / Pulse

- catman Enterprise

- catmanEasy / AP

- Software Downloads for Perception / Genesis HighSpeed

- Tescia

- ReliaSoft BlockSim

- ReliaSoft Cloud

- ReliaSoft Lambda Predict

- ReliaSoft Product Suites

- ReliaSoft RCM++

- ReliaSoft XFMEA

- ReliaSoft XFRACAS

- ReliaSoft Weibull++

- Classical Shock

- Random

- Random-On-Random

- Shock Response Spectrum Synthesis

- Sine-On-Random

- Time Waveform Replication

- Vibration Control Software

- Microphone Sets

- Microphone Cartridges

- Acoustic Calibrators

- Hydrophones

- Special Microphones

- Microphone Pre-amplifiers

- Sound Sources

- Accessories for acoustic transducers

- CCLD (IEPE) accelerometers

- Charge Accelerometers

- Fiber Optic Accelerometers

- Force transducers

- Reference accelerometers

- Impulse hammers / impedance heads

- Tachometer Probes

- Vibration Calibrators

- Cables

- Accessories

- High-Force LDS Shakers

- Medium-Force LDS Shakers

- Low-Force LDS Shakers

- Permanent Magnet Shakers

- Shaker Equipment / Slip Tables

- Acoustics and Vibration

- Asset & Process Monitoring

- Data Acquisition

- Electric Power Testing

- Fatigue and Durability Analysis

- Mechanical Test

- Reliability

- Weighing

- Electroacoustics

- Noise Source Identification

- Environmental Noise

- Sound Power and Sound Pressure

- Noise Certification

- Acoustic Material Testing

- OEM Custom Sensor Assemblies for eBikes

- OEM Custom Sensor Assemblies for Agriculture Industry

- Custom Sensor Assemblies for Robotic OEM

- OEM Custom Sensor Assemblies for Medical

- Automotive Structural Durability and Fatigue Testing

- Durability Simulation & Analysis

- Material Fatigue Characterisation

- Electrical Devices Testing

- Electrical Systems Testing

- Grid Testing

- High-Voltage Testing

- End-of-Line (EoL) & Durability Testing

- Process Weighing

- Sorting and Batching Solutions

- Scale Manufacturing Solutions

- Vehicle Scale Solutions

- Filling, Dosing and Checkweighing Control

- Housing

- Communication processor

- Amplifier modules

- Connection boards

- Special function modules

- Accessories

- Free-field Microphone Cartridges

- Pressure-field Microphone Cartridges

- Diffuse-field Microphone Cartridges

- Binaural Audio

- Outdoor microphones

- Probe Microphones

- Sound intensity probes

- Surface microphone

- Array microphones

- Other special microphone

- Production line test

- Microphone cables

- Tripods

- Microphone booms

- Microphone adapters

- Electroacoustic actuators

- Microphone Windscreens

- Nose cones

- Microphone holders

- Other accessories for acoustic transducers

- Microphone outdoor protection

- Measurement Cables

- Ground cables

- Thrust pieces

- Bearings

- Load feet

- Base plates

- Knuckle eyes

- Adapters

- Mounting aids and others

- Adhesives

- Protective coatings

- Cleaning material

- SG Kits

- Solder terminals

- Other

- Cables

- ZeroPoint Balancing

- TCS balancing

- TCO balancing

- Magnets

- Mounting clips/bases

- Studs, screws and washers

- Adhesives/Tools

- Adapters

- Mechanical filters

- Other accessories

- Testing Of Hands-Free Devices

- Smart Speaker Testing

- Speaker Testing

- Hearing Aid Testing

- Headphone Testing

- Telephone Headset And Handset Testing

- Acoustic Holography

- Acoustic Signature Management

- Underwater Acoustic Ranging

- Wind Tunnel Acoustic Testing – Aerospace

- Wind Tunnel Testing For Cars

- Beamforming

- Flyover Noise Source Identification

- Real-Time Noise Source Identification With Acoustic Camera

- Sound Intensity Mapping

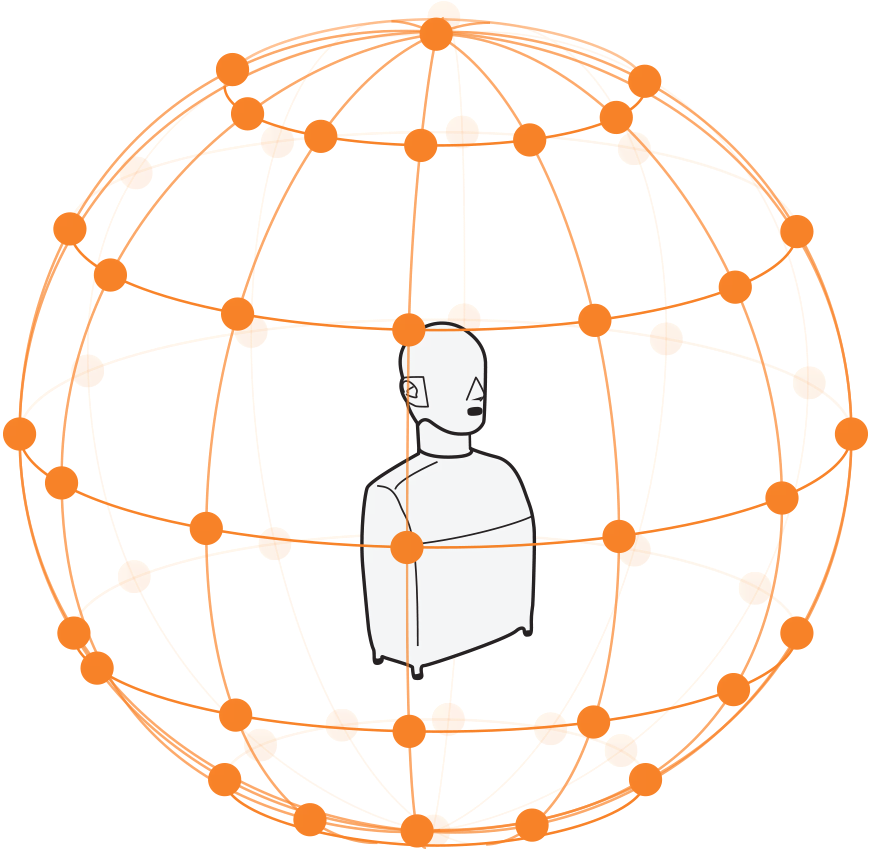

- Spherical Beamforming

- Product Noise

- Shock and Drop Testing

- Environmental Stress Screening - ESS

- Package Testing

- Buzz, Squeak and Rattle (BSR)

- Mechanical Satellite Qualification - Shaker Testing

- Operating Deflection Shapes (ODS)

- Classical Modal Analysis

- Ground Vibration Test (GVT)

- Operational Modal Analysis (OMA)

- Structural Health Monitoring (SHM)

- Test-FEA Integration

- Shock Response Spectrum (SRS)

- Structural Dynamics Systems

- Force Calibration

- Torque Calibration

- Microphones & Preamplifiers Calibration

- Accelerometers Calibration

- Pressure Calibration

- Displacement Sensor Calibration

- Sound Level Meter Calibration

- Sound Calibrator & Pistonphone Calibration

- Vibration Meter Calibration

- Vibration Calibrator Calibration

- Noise Dosimeter Calibration

- QuantumX Calibration

- Genesis HighSpeed Calibration

- Somat Calibration

- Industrial Electronics Calibration

- LAN-XI Calibration