arrow_back_ios

Main Menu

arrow_back_ios

Main Menu

- Mechanical & structural DAQ systems

- Sound & Vibration DAQ systems

- Industrial electronics

- Simulator Systems

- Electric power analyzers

- S&V Handheld devices

- Wireless DAQ Systems

- DAQ

- Drivers API

- nCode - Durability and Fatigue Analysis

- ReliaSoft - Reliability Analysis and Management

- Test Data Management

- Utility

- Vibration Control

- Inertial Sensor Software

- Sensor Finder

- Acoustic

- Current / voltage

- Displacement

- Exciters

- Force

- Inertial Sensors

- Load cells

- Multi Component

- Pressure

- Smart Sensors with IO-Link interface

- Strain

- Optical Temperature Sensors

- Tilt Sensors

- Torque

- Vibration

- OEM Custom Sensors

- Calibration Services for Transducers

- Calibration Services for Handheld Instruments

- Calibration Services for Instruments & DAQ

- On-Site Calibration

- Resources

- Articles

- Case Studies

- Recorded Webinars

- Primers and Handbooks

- Videos

- Whitepapers

- Search all resources

- Acoustics

- Data Acquisition & Analysis

- Durability & Fatigue

- Electric Power Testing

- Industrial Process Automation

- Machine automation control and navigation

- NVH

- Reliability

- Smart Sensors

- Structural Health Monitoring

- Vibration

- Virtual Testing

- Weighing

- Road Load Data Acquisition

arrow_back_ios

Main Menu

- QuantumX

- SomatXR

- MGCplus

- Optical Interrogators

- CANHEAD

- eDAQ

- Strain Gauge precision instrument

- Bridge calibration units

- GenHS

- LAN-XI

- Fusion-LN

- CCLD (IEPE) signal conditioner

- Charge signal conditioner

- Microphone signal conditioner

- NEXUS

- Microphone calibration system

- Vibration transducer calibration system

- Sound level meter calibration system

- Accessories for signal conditioner

- Accessories for calibration systems

- Multi channel amplifier

- Single channel amplifier and amplifier with display

- Weighing indicators

- Weighing electronics

- Accessories for industrial electronics

- eDrive power analyzer

- eDrive Package - Remote Probe based

- eGrid power analyzer

- GenHS

- Accessories for power analyzer and GenHS

- BK Connect / PULSE

- Tescia

- Catman data acquisition software

- Thousands of Channels at a Glance

- Perception – High speed data acquisition software

- Drivers for compatibility with third party software

- ReliaSoft BlockSim

- ReliaSoft Cloud

- ReliaSoft Lambda Predict

- ReliaSoft MPC

- ReliaSoft Product Suites

- ReliaSoft RCM++

- ReliaSoft XFMEA

- ReliaSoft XFRACAS

- ReliaSoft Weibull++

- Classical Shock

- Random

- Random-On-Random

- Shock Response Spectrum Synthesis

- Sine-On-Random

- Time Waveform Replication

- Vibration Control Software

- Microphone sets

- Cartridges

- Reference microphones

- Special microphones

- Acoustic material testing kits

- Acoustic calibrators

- Hydrophones

- Microphone Pre-amplifiers

- Sound Sources

- Accessories for acoustic transducers

- Fiber Optic Technology

- Inductive technology

- Strain gauge technology

- Accessories for displacement sensors

- Inertial Measurement Units (IMU)

- Vertical Reference Units (VRU)

- Attitude and Heading Reference Systems (AHRS)

- Inertial Navigation Systems (INS)

- Inertial Sensor Accessories

- Bending / beam

- Canister

- Compression

- Single Point

- Tension

- Weighing modules

- Digital load cells

- Accessories for load cells

- Strain gauges for experimental testing

- Fiber optical strain gauges

- Strain gauges for transducer manufacturing (OEM)

- Strain sensors

- Accessories for strain gauges

- Accelerometers

- Force transducers

- Impulse hammers / impedance heads

- Tachometer probes

- Vibration calibrators

- Cables

- Accessories

- Force - OEM custom sensors

- Torque - OEM custom sensors

- Load - OEM custom sensors

- Multi-Axis - OEM custom Sensors

- Pressure - OEM custom sensors

- HATS (Head and torso simulator)

- Artificial Ear

- Electroacoustic hardware

- Bone conduction

- Electroacoustic software

- Pinnae

- Accessories for electroacoustic application

- Accessories

- Actuators

- Combustion Engines

- Durability

- eDrive

- Mobile Systems

- Production Testing Sensors

- Transmission Gearboxes

- Turbo Charger

- Force Calibration

- Torque Calibration

- Microphones & Preamplifiers Calibration

- Accelerometers Calibration

- Pressure Calibration

- Displacement Sensor Calibration

- Sound Level Meter Calibration

- Sound Calibrator & Pistonphone Calibration

- Vibration Meter Calibration

- Vibration Calibrator Calibration

- Noise Dosimeter Calibration

- QuantumX Calibration

- Genesis HighSpeed Calibration

- Somat Calibration

- Industrial Electronics Calibration

- LAN-XI Calibration

- Guidelines on Calibration Intervals

- Certificate Samples

- Interactive Calibration Certificate

- Download of Calibration Certificates

- Acoustics and Vibration

- Asset & Process Monitoring

- Data Acquisiton

- Electric Power Testing

- Fatigue and Durability Analysis

- Mechanical Test

- Reliability

- Weighing

- Electroacoustics

- Noise Source Identification

- Handheld S&V measurements

- Sound Power and Sound Pressure

- Noise Certification

- Acoustic Material Testing

- Structural Durability and Fatigue Testing

- Durability Simulation & Analysis

- Material Fatigue Characterisation

- Electrical Devices Testing

- Electrical Systems Testing

- Grid Testing

- High-Voltage Testing

- End of Line Durability Testing

- Vibration Testing with Electrodynamic Shakers

- Structural Dynamics

- Machine Analysis and Diagnostics

- Troubleshooting Analyser

- Naval Certification and Sea-Trials

- Process Weighing

- Sorting and Batching Solutions

- Scale Manufacturing Solutions

- Vehicle Scale Solutions

- Filling, Dosing and Checkweighing Control

arrow_back_ios

Main Menu

- Housing

- Communication processor

- Amplifier modules

- Connection boards

- Special function modules

- Accessories for MGCplus

- Binaural Audio

- Outdoor microphones

- Probe Microphones

- Sound intensity probes

- Surface microphone

- Array microphones

- Other special microphones

- Microphones for production line test

- Probe microphones

- Microphone Cables

- Tripods

- Microphone booms

- Microphone adapters

- Electrostatic actuators

- Microphone windscreen

- Nose cones

- Microphone holders

- Other accessories for acoustic transducers

- Microphones outdoor protection

- Rod end bearings

- Tensile load introductions

- Thrust pieces and load buttons

- Cables and connectors

- Screw sets

- Load bases / Tensile compressive adapters

- Measurement cables

- Ground cables

- Thrust pieces

- Bearings

- Load feet

- Base plates

- Knuckle eyes

- Adapters

- Mounting aids and others

- Adhesives

- Protective coatings

- Cleaning material

- SG Kits

- Solder terminals

- Other strain gauge accessories

- Cables

- ZeroPoint Balancing

- TCS Balancing

- TC0 Balancing

- Magnets

- Mounting clips/bases

- Studs, screws and washers

- Adhesives/Tools

- Adapters

- Mechanical filters

- Other accessories

- High-Force LDS Shakers

- Medium-Force LDS Shakers

- Low-Force LDS Shakers

- Permanent Magnet Shakers

- Shaker Equipment / Slip Tables

- Testing Of Hands-Free Devices

- Smart Speaker Testing

- Speaker Testing

- Hearing Aid Testing

- Headphone Testing

- Soundbar Testing

- Telephone Headset And Handset Testing

- Acoustic Holography

- Acoustic Signature Management

- Underwater Acoustic Ranging

- Wind Tunnel Acoustic Testing – Aerospace

- Wind Tunnel Testing For Cars

- Beamforming

- Flyover Noise Source Identification

- Real-Time Noise Source Identification With Acoustic Camera

- Sound Intensity Mapping

- Spherical Beamforming

- Product Noise

- HighVoltage HighPower Switchgear Tests

- Transformer Testing

- Current Zero Testing

- Circuit Breaker Testing

- Messung der Unrundheit von Eisenbahnrädern

- On-Board Measurement

- Pantograph and Overhead Lines Monitoring

- Wayside Train Monitoring & Measurement

- Shock and Drop Testing

- Environmental Stress Screening - ESS

- Package Testing

- Buzz, Squeak and Rattle (BSR)

- Mechanical Satellite Qualification - Shaker Testing

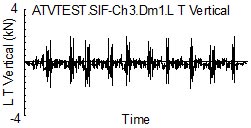

Figure 1. Ten laps of test track

Despite being a carefully controlled test track and a single driver, the variability in loading during each lap is easily seen in the figure.

From a fatigue damage perspective, is the data in Fig. 1 enough to characterize the service usage for the expected life of the component? This question can be answered by using the variability in the measured loading history to project what the expected fatigue damage and loading history would be if the loads were measured for longer time.

Analyzing the variability in the time domain for functions of load and time is not feasible or needed. In fatigue, the rainflow histogram of the loads is of more interest than the loading history itself since fatigue damage is computed from it.

Figure 1. Ten laps of test track

Despite being a carefully controlled test track and a single driver, the variability in loading during each lap is easily seen in the figure.

From a fatigue damage perspective, is the data in Fig. 1 enough to characterize the service usage for the expected life of the component? This question can be answered by using the variability in the measured loading history to project what the expected fatigue damage and loading history would be if the loads were measured for longer time.

Analyzing the variability in the time domain for functions of load and time is not feasible or needed. In fatigue, the rainflow histogram of the loads is of more interest than the loading history itself since fatigue damage is computed from it.

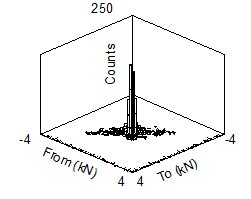

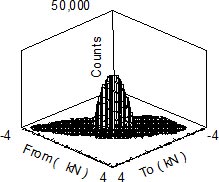

Figure 2. Rainflow histogram

Two dimensional rainflow histograms contain information about both the load ranges and means. Figure 2 shows the rainflow histogram of the data from Fig. 1 in a To-From format. This type of histogram preserves mean stress effects which are usually important for fatigue analysis.

Data from Fig. 2 is plotted as a cumulative exceedance diagram in Fig. 3.

Figure 2. Rainflow histogram

Two dimensional rainflow histograms contain information about both the load ranges and means. Figure 2 shows the rainflow histogram of the data from Fig. 1 in a To-From format. This type of histogram preserves mean stress effects which are usually important for fatigue analysis.

Data from Fig. 2 is plotted as a cumulative exceedance diagram in Fig. 3.

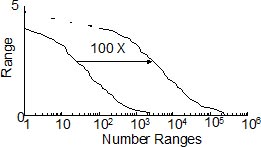

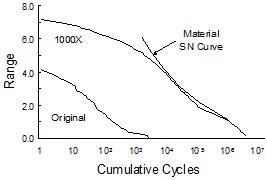

Figure 3. Exceedance diagram

The exceedance curve for the original loading history is shown on the left side of the diagram. If the test data were measured for 1000 laps (100 times longer), the distribution would be expected to be shifted to the right and have a similar shape. The dashed lines schematically show that higher loads would be expected for longer times. Although the exceedance diagram is easier to visualize, it looses valuable information about mean effects.

In this paper we show how the variability in a measured rainflow histogram can be used to estimate the expected rainflow histogram for longer times. This extrapolated histogram can then be used to reconstruct a new longer time history for testing or analysis.

Rainflow Extrapolation

Extrapolation of rainflow histograms was first proposed by Dressler [1]. A short description of the concept is given here. Readers are referred to reference 1 for the details. The rainflow histogram is treated as a two dimensional probability distribution. A simple probability density function could be obtained by dividing the number of cycles in each bin of the histogram by the total number of cycles. A new histogram corresponding to any number of total cycles can be constructed by randomly placing cycles in the histogram according to their probability of occurrence. However, this approach will be essentially the same as multiplying the cycles in the histogram by an extrapolation factor. But this is unrealistic. Even the same driver over the same test track cannot repeat the loading history. For example each time a driver hits a pot hole the loads will be somewhat different. This is clearly shown in the ten laps in Fig. 1. The peak load from a particular event will be placed into an individual bin in the rainflow histogram. During subsequent laps, this load will have a different maximum value and be placed into a bin in the same neighborhood as the first lap.

Figure 3. Exceedance diagram

The exceedance curve for the original loading history is shown on the left side of the diagram. If the test data were measured for 1000 laps (100 times longer), the distribution would be expected to be shifted to the right and have a similar shape. The dashed lines schematically show that higher loads would be expected for longer times. Although the exceedance diagram is easier to visualize, it looses valuable information about mean effects.

In this paper we show how the variability in a measured rainflow histogram can be used to estimate the expected rainflow histogram for longer times. This extrapolated histogram can then be used to reconstruct a new longer time history for testing or analysis.

Rainflow Extrapolation

Extrapolation of rainflow histograms was first proposed by Dressler [1]. A short description of the concept is given here. Readers are referred to reference 1 for the details. The rainflow histogram is treated as a two dimensional probability distribution. A simple probability density function could be obtained by dividing the number of cycles in each bin of the histogram by the total number of cycles. A new histogram corresponding to any number of total cycles can be constructed by randomly placing cycles in the histogram according to their probability of occurrence. However, this approach will be essentially the same as multiplying the cycles in the histogram by an extrapolation factor. But this is unrealistic. Even the same driver over the same test track cannot repeat the loading history. For example each time a driver hits a pot hole the loads will be somewhat different. This is clearly shown in the ten laps in Fig. 1. The peak load from a particular event will be placed into an individual bin in the rainflow histogram. During subsequent laps, this load will have a different maximum value and be placed into a bin in the same neighborhood as the first lap.

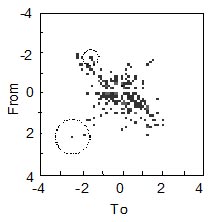

Figure 4. Histogram variability

Figure 4 shows a rainflow histogram in a two dimensional view. Consider the event going from 2 to –2.5. Next time this is repeated it will be somewhere in the neighborhood of ( 2, -2.5 ) indicated by the large dashed circle. There is not much data in this region of the histogram and considerable variability is expected. Next consider the cycles from –2 to –1.5. Here there is much more data and we would expect the variability to be much smaller as indicated by the small dashed circle. Extrapolating rainflow histograms is essentially a task of finding a two dimensional probability distribution function from the original rainflow data.

For a given set of data taken from a continuous population, X, there are many ways to construct a probability distribution of the data. There are two general classes of probability density estimates: parametric and non-parametric. In parametric density estimation, an assumption is made that the given data set will fit a pre-determined theoretical probability distribution. The shape parameters for the distribution must be estimated from the data. Non-parametric density estimators make no assumptions about the distribution of the entire data set. A histogram is a non-parametric density estimator. For extrapolation purposes, we wish to convert the discrete points of a histogram into a continuous probability density. Kernal estimators [2-3] provide a convenient way to estimate the probability density. The method can be thought of as fitting an assumed probability distribution to a local area of the histogram. The size of the local area is determined by the bandwidth of the estimator. This is indicated by the size of the circle in Fig. 4. An adaptive bandwidth for the kernal is determined by how much data is in the neighborhood of the point being considered.

Statistical methods are well developed for regions of the histogram where there is a lot of data. Special considerations are needed for sparsely populated regions [4]. The expected maximum load range for the extrapolated histogram is estimated from a weibull fit to the large amplitude load ranges. This estimate is then used to determine an adaptive bandwidth for the sparse data regions of the histogram. Once the adaptive bandwidth is determined, the probability density of the entire histogram can be computed. The expected histogram for any desired total number of cycles is constructed by randomly placing cycles in the histogram with the appropriate probability. It should be noted that this process does not produce a unique extrapolation. Many extrapolations can be done with the same extrapolation factor so some information about the variability of the resulting loading history can be obtained.

Results for a 1000 times extrapolation of the loading history are shown in Fig. 5. It is easier to visualize the results of the extrapolation when the results are viewed in terms of the exceedance diagram given in Fig. 6. Two plots are shown in the figure. One from the data in Fig. 5 and another one representing a 1000 repetitions of the original loading history.

Figure 4. Histogram variability

Figure 4 shows a rainflow histogram in a two dimensional view. Consider the event going from 2 to –2.5. Next time this is repeated it will be somewhere in the neighborhood of ( 2, -2.5 ) indicated by the large dashed circle. There is not much data in this region of the histogram and considerable variability is expected. Next consider the cycles from –2 to –1.5. Here there is much more data and we would expect the variability to be much smaller as indicated by the small dashed circle. Extrapolating rainflow histograms is essentially a task of finding a two dimensional probability distribution function from the original rainflow data.

For a given set of data taken from a continuous population, X, there are many ways to construct a probability distribution of the data. There are two general classes of probability density estimates: parametric and non-parametric. In parametric density estimation, an assumption is made that the given data set will fit a pre-determined theoretical probability distribution. The shape parameters for the distribution must be estimated from the data. Non-parametric density estimators make no assumptions about the distribution of the entire data set. A histogram is a non-parametric density estimator. For extrapolation purposes, we wish to convert the discrete points of a histogram into a continuous probability density. Kernal estimators [2-3] provide a convenient way to estimate the probability density. The method can be thought of as fitting an assumed probability distribution to a local area of the histogram. The size of the local area is determined by the bandwidth of the estimator. This is indicated by the size of the circle in Fig. 4. An adaptive bandwidth for the kernal is determined by how much data is in the neighborhood of the point being considered.

Statistical methods are well developed for regions of the histogram where there is a lot of data. Special considerations are needed for sparsely populated regions [4]. The expected maximum load range for the extrapolated histogram is estimated from a weibull fit to the large amplitude load ranges. This estimate is then used to determine an adaptive bandwidth for the sparse data regions of the histogram. Once the adaptive bandwidth is determined, the probability density of the entire histogram can be computed. The expected histogram for any desired total number of cycles is constructed by randomly placing cycles in the histogram with the appropriate probability. It should be noted that this process does not produce a unique extrapolation. Many extrapolations can be done with the same extrapolation factor so some information about the variability of the resulting loading history can be obtained.

Results for a 1000 times extrapolation of the loading history are shown in Fig. 5. It is easier to visualize the results of the extrapolation when the results are viewed in terms of the exceedance diagram given in Fig. 6. Two plots are shown in the figure. One from the data in Fig. 5 and another one representing a 1000 repetitions of the original loading history.

Figure 5. Extrapolated histogram

Table 1 gives the results of fatigue lives computed from various extrapolations of the original loading history. Fatigue lives represent the expected operating life of the structure in hours. A simple SN approach was employed in the calculations. Any convenient fatigue analysis technique may be used and can be combined with a probabilistic description of the material properties.

Table 1. Fatigue Lives

Figure 5. Extrapolated histogram

Table 1 gives the results of fatigue lives computed from various extrapolations of the original loading history. Fatigue lives represent the expected operating life of the structure in hours. A simple SN approach was employed in the calculations. Any convenient fatigue analysis technique may be used and can be combined with a probabilistic description of the material properties.

Table 1. Fatigue Lives

Figure 6. Distribution of cycles and fatigue damage

One effective method to visualize the damaging cycles is to plot the distribution of cycles and material behavior on the same scale as shown in Fig. 6. Material properties are scaled so that they have the same units as the loading history and this plot represents the expected fatigue life under constant amplitude loading. The point of tangency of the two curves gives an indication of the most damaging range of cycles. The maximum load range cycles are not the most damaging in this history, rather the most damaging cycles for this loading history are at about ½ of the maximum load range in the extrapolated histogram.

Figure 6. Distribution of cycles and fatigue damage

One effective method to visualize the damaging cycles is to plot the distribution of cycles and material behavior on the same scale as shown in Fig. 6. Material properties are scaled so that they have the same units as the loading history and this plot represents the expected fatigue life under constant amplitude loading. The point of tangency of the two curves gives an indication of the most damaging range of cycles. The maximum load range cycles are not the most damaging in this history, rather the most damaging cycles for this loading history are at about ½ of the maximum load range in the extrapolated histogram.

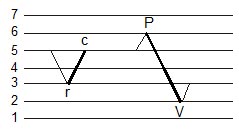

Figure 7. Inserting a VP cycle

An VP cycle ( r < c ) can be inserted into any PV reversal if c <= P and r > V. A VP cycle can not be inserted into a PV cycle of the same magnitude.

Figure 7. Inserting a VP cycle

An VP cycle ( r < c ) can be inserted into any PV reversal if c <= P and r > V. A VP cycle can not be inserted into a PV cycle of the same magnitude.

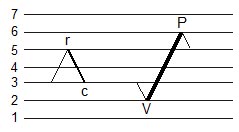

Figure 8. Insertion of a PV cycle

Figure 8 shows the insertion of a PV cycle. An PV cycle ( c < r ) can be inserted into any VP reversal if r < P and c >= V. A PV cycle can not be inserted into a VP cycle of the same magnitude.

These two simple rules provide the basis for rainflow reconstruction. The process starts with the largest cycle either PVP or VPV. The next largest cycle is then inserted in an appropriate location in the reconstructed time history. After the first few cycles, there are many possible locations to insert a smaller cycle. All possible insertion locations are determined and one is selected at random.

Figure 8. Insertion of a PV cycle

Figure 8 shows the insertion of a PV cycle. An PV cycle ( c < r ) can be inserted into any VP reversal if r < P and c >= V. A PV cycle can not be inserted into a VP cycle of the same magnitude.

These two simple rules provide the basis for rainflow reconstruction. The process starts with the largest cycle either PVP or VPV. The next largest cycle is then inserted in an appropriate location in the reconstructed time history. After the first few cycles, there are many possible locations to insert a smaller cycle. All possible insertion locations are determined and one is selected at random.